If you don’t care about the story behind this, you can scroll down to the section with the command lines!

Introduction

I bought a Mac Mini machine in 2006. It was equipped with a Core Duo processor at 1.66 GHz, 512 MB of RAM, a 60 GB hard drive, and an Intel 945GM GPU chipset. This machine could be upgraded (not easily, but that was still possible, except for the GPU chipset), so over time, it got:

- an Intel Core 2 Duo T7600 processor (the most capable CPU compatible with the motherboard)

- 2 RAM modules of 2GB

- a 64GB SSD

I did not upgrade the operating system (Mac OS X 10.4 “Tiger”); instead, I installed Ubuntu quite soon. At first, it was Ubuntu 6.06 (codename “Dapper Drake”), and of course I upgraded it over time. And guess what? I am still using this computer at home. It boots fast, it is still quiet, and it works really well for what I do with it: mostly internet browsing and, when I work from home, software development.

The main limitation of this old machine is that it cannot run a 64-bit operating system. Even though the upgraded processor has a 64-bit instruction set, the rest of the system cannot support it. And nowadays, this is starting to complicate things a little.

Another limitation, due to the motherboard, is that it cannot use the full amount of RAM: the operating system reports 3 GB instead of 4 GB. However, 32-bit operating systems consume less memory, so 3 GB is still very acceptable for my usage.

About Ubuntu OS versions and upgrades

Every two years, Canonical releases a new Ubuntu LTS (Long-Term Support) version. These LTS versions are supported for 5 years. At the time of writing, the latest LTS version is Ubuntu 18.04 (as one may guess, “18.04” means “April 2018”), and the next one (“20.04”) will be released soon.
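The version-number arithmetic can be sketched in a few lines of shell. This is only an illustration: the function name `lts_support_window` is made up for this example, and it simply assumes that the 5-year support period mentioned above applies to the version given.

```shell
# Hypothetical helper (not an Ubuntu tool): derive the release month and the
# end of the 5-year support window from an LTS version string such as "18.04".
lts_support_window() {
    version="$1"
    yy="${version%%.*}"                # part before the dot, e.g. "18"
    mm="${version##*.}"                # part after the dot, e.g. "04"
    release_year=$((2000 + $yy))
    end_year=$(($release_year + 5))    # assumption: 5 years of support
    echo "released ${release_year}-${mm}, supported until ${end_year}-${mm}"
}

lts_support_window 16.04
lts_support_window 18.04
```

For 16.04 and 18.04 this gives April 2021 and April 2023, the two dates that matter for this story.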

Canonical also releases other versions every 6 months, but these are only supported for a short time, so using them requires upgrading every 6 months to continue getting security updates.

Obviously, the OS can upgrade itself without having to reinstall the machine from scratch. However, upgrading such an old machine may lead to problems: probably nobody (except me!) tests the newest OS versions on such an old machine…

In order to test a version before actually performing the upgrade, one may download the ISO image of the new version and use it in “live mode”: run the OS from a USB device, without installing it. But since Ubuntu 18.04, Canonical no longer provides 32-bit ISO images.

When the problem arose

In early May 2018, when the new LTS version had just been released, I decided to upgrade my Mac Mini. At that time, it was running the previous LTS, 16.04. The upgrade process went well, but after the restart, I ran into some issues related to GPU handling. First, there was a very long delay on boot; that could be fixed just by adding a kernel parameter. Second, the GPU driver was apparently not loaded correctly. In the “Details” entry of the settings window, the GPU driver displayed was “llvmpipe”. This means that software rendering was enabled, as a fallback. The “llvmpipe” software rendering stack is probably very capable on a new machine, but with a CPU from 2007, software rendering is obviously not a good solution: the windowing system appeared much slower than before.

I looked at various forum posts but could not fix this issue. So I finally decided to reinstall Ubuntu 16.04. With 5 years of support and security updates, I could still use this Mac Mini until April 2021, which was still remarkable.

And now…

Recently, a forum post led me to think my problem might be fixed by a recent update of the “mesa” GPU library. Two or three months ago, this update was integrated into the Ubuntu 18.04 package updates. So my goal was to retest this updated version. But if it failed again, I would have to reinstall the machine with version 16.04 again, from scratch! So I needed a way to test this updated version without installing it on the machine. In other words, I needed a “live” version of Ubuntu 18.04. And, since Canonical no longer provides 32-bit ISO images, I decided to build my own 32-bit Ubuntu 18.04 live OS, as shown below.

Building a 32-bit Ubuntu 18.04 live OS, my way

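You will need quite a large amount of free space: around 10 gigabytes. You can run this procedure on a 32-bit or 64-bit Ubuntu computer. Most of the commands below (debootstrap, mount, chroot, dd to a device) need root privileges, so run them as root or prefix them with sudo.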
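Before starting, the free-space requirement can be checked from the shell. The following is a minimal sketch, not part of the original procedure: `has_free_gb` is a hypothetical helper name, and 10 is just the rough estimate given above.

```shell
# Hypothetical helper: succeed if a directory has at least N gigabytes free.
has_free_gb() {
    dir="$1"
    needed_gb="$2"
    # df -P prints one line per filesystem in POSIX format; -k reports sizes
    # in 1024-byte blocks. Column 4 of the second line is the available space.
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge $(($needed_gb * 1024 * 1024)) ]
}

if has_free_gb . 10; then
    echo "enough free space in $(pwd)"
else
    echo "less than 10 GB free in $(pwd)"
fi
```

Run it from the directory where the chroot directory and the image will be created.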

First, let’s create a chroot environment containing a minimal operating system base:

$ MIRROR=http://ch.archive.ubuntu.com/ubuntu/

$ debootstrap --arch i386 --variant minbase bionic bionic-i386 $MIRROR

Notes:

- debootstrap is the very classical command to build such a chroot environment.

- bionic is the codename for Ubuntu 18.04.

- --arch i386 allows us to indicate we target a 32-bit architecture.

- --variant minbase allows us to specify we want a minimal environment at this step.

- bionic-i386 is the name I gave to the output directory generated.

The reason we chose variant minbase is that debootstrap is not as smart as a real package manager, so if one tries to solve complex dependencies at this step (by choosing another variant, and using option --include to select more packages to be installed), it will probably fail.

Before entering this chroot environment, let’s make sure /proc, /sys, /dev and /dev/pts will be available inside it, otherwise some commands may fail.

$ mount -o bind /proc bionic-i386/proc

$ mount -o bind /sys bionic-i386/sys

$ mount -o bind /dev bionic-i386/dev

$ mount -o bind /dev/pts bionic-i386/dev/pts
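The four bind mounts, and the matching unmounts needed once the work in the chroot is finished, can also be written as two small loops. This is a sketch under stated assumptions: `CHROOT_DIR` is the directory name used above, while `run`, `bind_all`, `unbind_all` and the `DRY_RUN` switch are hypothetical names introduced here so the commands can be previewed without root privileges.

```shell
CHROOT_DIR=bionic-i386
DRY_RUN=1   # 1: only print the commands; set to 0 to really run them (as root)

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

bind_all() {
    # Parents before children: /dev must be mounted before /dev/pts.
    for fs in proc sys dev dev/pts; do
        run mount -o bind "/$fs" "$CHROOT_DIR/$fs"
    done
}

unbind_all() {
    # Reverse order, always naming the paths inside the chroot directory,
    # never the host's own /proc, /sys or /dev.
    for fs in dev/pts dev sys proc; do
        run umount "$CHROOT_DIR/$fs"
    done
}

bind_all
unbind_all
```

Keeping the mount list in one place makes it harder to forget a mount point or to unmount in the wrong order.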

Then we are ready to enter the chroot environment:

$ chroot bionic-i386

[chroot]#

First let us install a text editor and the locales package.

Setting up a correct locale will allow us to clear many verbose warnings in the following commands.

[chroot]# apt update && apt install vim locales

[chroot]# vi /etc/locale.gen # uncomment the locale you want, mine is fr_FR.UTF-8

[chroot]# locale-gen

Generating locales (this might take a while)...

fr_FR.UTF-8... done

Generation complete.

[chroot]# echo LANG=fr_FR.UTF-8 >> /etc/default/locale

[chroot]#
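If you prefer not to edit /etc/locale.gen by hand, the uncommenting step can probably be scripted. The sketch below assumes GNU sed (for the -i option) and entries of the form "# fr_FR.UTF-8 UTF-8"; `enable_locale` is a hypothetical helper name, and the demonstration works on a scratch file so that nothing on the system is modified.

```shell
# Hypothetical helper: uncomment one locale in a locale.gen-style file.
enable_locale() {
    locale_name="$1"
    locale_gen="${2:-/etc/locale.gen}"
    # Remove the leading "# " from the line that starts with the locale name.
    sed -i "s/^# *\(${locale_name} .*\)\$/\1/" "$locale_gen"
}

# Demonstration on a scratch file.
demo_file=$(mktemp)
printf '%s\n' '# en_US.UTF-8 UTF-8' '# fr_FR.UTF-8 UTF-8' > "$demo_file"
enable_locale fr_FR.UTF-8 "$demo_file"
cat "$demo_file"
rm -f "$demo_file"
```

Inside the chroot, one would call the function with only the locale name and then run locale-gen as above.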

Next, we shall modify the configuration of source repositories, to enable security updates, regular updates, and the restricted repository section.

[chroot]# cat << EOF > /etc/apt/sources.list

deb http://ch.archive.ubuntu.com/ubuntu bionic main restricted

deb http://ch.archive.ubuntu.com/ubuntu bionic-updates main restricted

deb http://security.ubuntu.com/ubuntu bionic-security main restricted

EOF

[chroot]#
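If you use a different mirror or want to reuse this step for another release, the same three lines can be generated from two parameters. This is only a sketch: `print_sources_list` is a made-up function name, and the security host is simply the one used above.

```shell
# Hypothetical helper: print a sources.list for a given mirror and codename.
print_sources_list() {
    mirror="${1%/}"     # drop a trailing slash, if any
    codename="$2"
    sections="main restricted"
    echo "deb $mirror $codename $sections"
    echo "deb $mirror $codename-updates $sections"
    echo "deb http://security.ubuntu.com/ubuntu $codename-security $sections"
}

print_sources_list http://ch.archive.ubuntu.com/ubuntu/ bionic
```

Inside the chroot, the output would be redirected to /etc/apt/sources.list.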

We can now upgrade the whole OS to the latest package versions:

[chroot]# apt update && apt upgrade

[chroot]#

Now, we need to turn this minimal environment (minbase) into a much more complete OS:

- we want the default graphical desktop (package ubuntu-desktop)

- we need proper network management (this is mostly ready after installation of ubuntu-desktop, but we need to add package netplan.io for proper network setup on boot)

- while we are selecting packages, we can add some more that will be needed later in this process: linux-image-generic for the Linux kernel, and lvm2 (logical volume management). (Anyway, if we omit these packages at this step, the image construction tool we will use later will add them automatically.)

[chroot]# apt install netplan.io ubuntu-desktop lvm2 linux-image-generic

[chroot]#

Note: when the package manager warns that grub will not be installed on any device, you can safely answer that this is not a problem.

Apparently, the default configuration for the netplan networking tool is not installed with its package. So let’s create this configuration file (I copied it from another system that was already installed).

[chroot]# cat << EOF > /etc/netplan/01-network-manager-all.yaml

# Let NetworkManager manage all devices on this system

network:

  version: 2

  renderer: NetworkManager

EOF

[chroot]#

We can also define a root password now:

[chroot]# passwd

And we are done with system customization:

[chroot]# exit

$ umount bionic-i386/dev/pts bionic-i386/dev bionic-i386/sys bionic-i386/proc

One last thing: the DNS configuration that was working on this build machine may not work on the target machine we want to boot. So let’s remove it. The target OS should find its configuration on its own.

$ rm bionic-i386/etc/resolv.conf

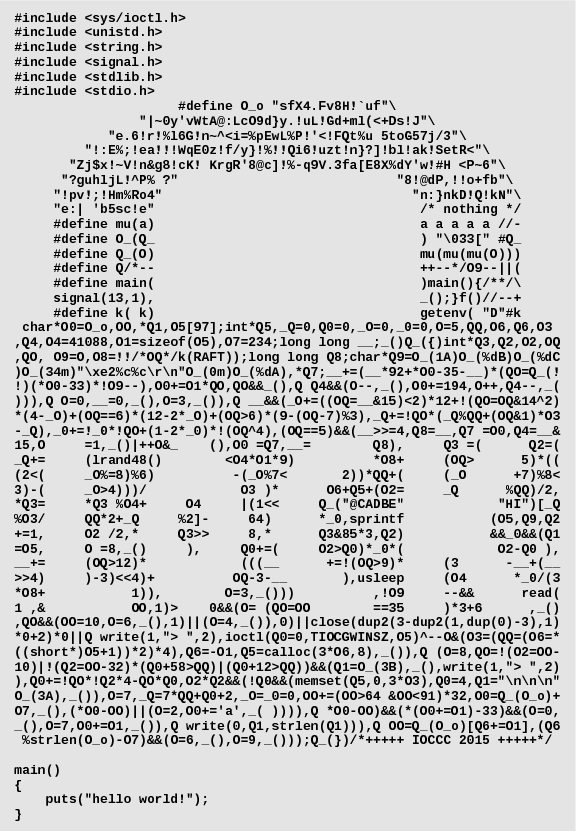

To turn the chroot environment into an image that can be flashed on a USB device, we can use a tool I developed called debootstick. It is available in Ubuntu (repository section universe) and Debian, but if you want support for the newest operating system versions, you may prefer to install it from the GitHub repository.

$ debootstick --config-kernel-bootargs "+console=ttyS0" bionic-i386 bionic.dd

Notes:

- --config-kernel-bootargs "+console=ttyS0" is optional; it eases debugging at the next step (see below).

- Generated file bionic.dd is our USB image. It is quite large (3.4G). This is because debootstick does not create compressed images like standard Ubuntu ISO images. Instead, the OS is “installed” inside the USB image the same way it would be installed on the target disk. Thanks to this design choice, Linux kernel or bootloader package updates work fine, whereas they fail on official images. This makes debootstick-generated images usable in the long term. Check this for more info.

Before dumping the image to a USB device, we can test it with kvm:

$ cp bionic.dd bionic-test.dd

$ truncate -s 16G bionic-test.dd # simulate a copy on a larger drive

$ kvm -drive file=bionic-test.dd,media=disk,index=0,format=raw -m 4G -serial mon:stdio \

-device virtio-net-pci,netdev=user.0 -netdev user,id=user.0

Notes (more info here):

- I ran this on a powerful computer.

- I allocated 4G RAM for this virtual machine.

- -serial mon:stdio gives us a command line shell (multiplexed with the qemu monitor), useful in case of problems.

- The remaining options allow the virtual machine to get internet connectivity.

The virtual machine should run well. You should get the initial configuration screens: user name and password, time zone, etc. Once logged into the graphical session, you can observe that the GPU driver is “llvmpipe” (software rendering). This is because kvm does not provide proper 3D GPU emulation in this configuration. But if the host CPU is powerful enough, the desktop can still be quite responsive.
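The renderer can also be checked from a terminal inside the graphical session. The sketch below assumes the glxinfo tool (shipped in the mesa-utils package, which is not installed by the steps above) and treats only the “llvmpipe” and “softpipe” Mesa renderers as software rendering; `is_software_renderer` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if the renderer string names a software renderer.
is_software_renderer() {
    case "$1" in
        *llvmpipe*|*softpipe*) return 0 ;;
        *) return 1 ;;
    esac
}

# glxinfo needs a running graphical session; this part is skipped when the
# tool is not installed.
if command -v glxinfo >/dev/null 2>&1; then
    renderer=$(glxinfo 2>/dev/null | sed -n 's/^OpenGL renderer string: //p')
    if is_software_renderer "$renderer"; then
        echo "software rendering: $renderer"
    else
        echo "renderer: $renderer"
    fi
fi
```

The same check can be run later on the target machine, where a hardware renderer is expected instead.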

We can now dump the image to our USB device:

$ dmesg -w # then, plug in your USB device, to be sure about its name /dev/sd<X>

[...]

^C

$ dd if=bionic.dd of=/dev/sd<X> bs=10M conv=fsync
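Since dd overwrites whatever device it is given, a quick sanity check on the device name can help avoid wiping an internal disk. The following sketch relies on the “removable” attribute that the Linux kernel exposes in sysfs; `is_removable_disk` is a hypothetical helper, and because some USB disks report 0 there, treat the result as a hint rather than a guarantee.

```shell
# Hypothetical helper: succeed only if sysfs marks the disk as removable.
# $1 is a bare device name such as "sdb"; $2 optionally overrides the sysfs
# location (handy for experimenting without real hardware).
is_removable_disk() {
    dev="$1"
    sysfs="${2:-/sys}"
    flag_file="$sysfs/block/$dev/removable"
    [ -r "$flag_file" ] && [ "$(cat "$flag_file")" = "1" ]
}

# List the sd* disks that look removable.
for path in /sys/block/sd*; do
    [ -e "$path" ] || continue
    name=${path##*/}
    if is_removable_disk "$name"; then
        echo "/dev/$name looks removable"
    fi
done
```

Combined with the dmesg output above, this gives two independent confirmations of the device name before writing to it.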

And, finally, reboot the target machine with it!

Conclusion

Thanks to this 32-bit live USB image, I could verify that this updated version of Ubuntu 18.04 would work fine on my Mac Mini. The settings window was displaying the expected GPU driver (‘Intel 945GM’ instead of ‘llvmpipe’ as before). Therefore I knew I could safely start the upgrade procedure. The upgrade went well and I have found no issues since. My old Mac Mini is as responsive as before. With security updates for this version ending in April 2023, I suppose this old computer from 2006 can probably work very well until then! (or later, if version 20.04 works as well…)

If you are interested, be aware that debootstick can generate images for a Raspberry Pi, and it can also work with other tools (e.g. docker instead of debootstrap). For instance, this example shows how easily you can turn a docker image into a bootable image for a Raspberry Pi.